“What are the main things a modern data scientist/machine learning engineer does?”

At first glance, this seems like an easy question with an obvious answer:

“Build machine learning models and analyze data.”

In reality, this answer rarely holds true.

Efficient use of data is vital to a successful modern business. However, turning data into tangible business outcomes requires that data to go on a journey: it must be acquired, securely shared, and analyzed within its own development lifecycle.

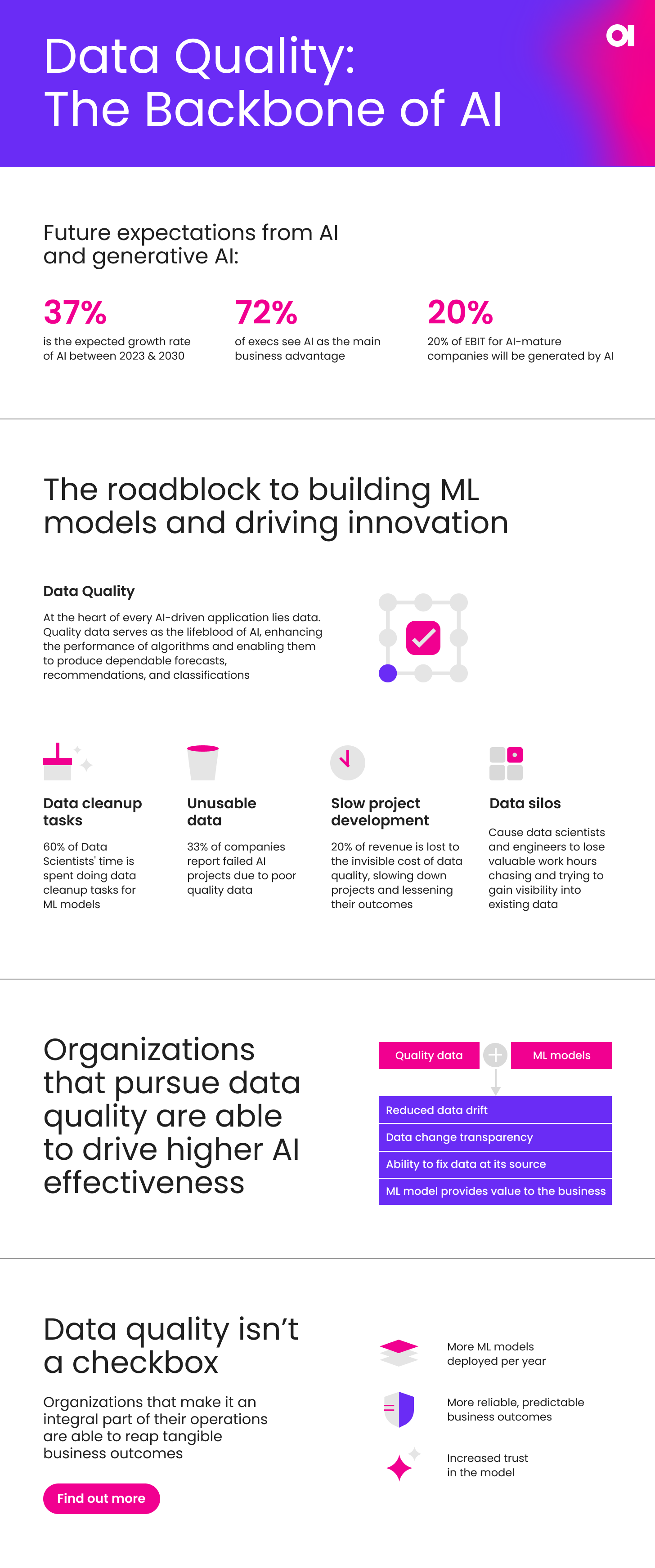

The explosion of cloud computing in the mid-to-late 2000s and the “enterprisification” of ML in the mid-to-late 2010s have made the start and end of this journey very much a solved problem. Unfortunately, businesses tend to hit roadblocks in the less exciting middle part, a stage that often lives outside the executive levels with their big budgets and flashy slides. That middle stage is today’s subject: data quality.

How poor data quality affects businesses

Poor-quality, unusable data is a burden for the people at the end of our data's journey: the data users who rely on it to build models and carry out other revenue-generating activities. The all-too-common story is of a data scientist hired to “build machine learning models and analyze data,” only to find that bad data prevents them from doing anything of the sort.

All because of “garbage in, garbage out”.

And in this case, the “garbage in” is bad data quality. Organizations put so much effort and attention into getting access to this data, but nobody thinks to check if the data going “in” to the model is actually usable.

According to Forbes, data scientists spend 60% of their time cleaning up data so that what comes “out” of their projects works properly. They may be guessing what fields mean and inferring values to fill gaps. They may be dropping otherwise valuable data from their models, or even waiting to hear back from subject-matter experts (SMEs). Either way, the outcome is the same: frustration and inefficiency. Ultimately, all this dirty data keeps data scientists from the valuable part of their job: solving business problems.

This massive, often invisible cost slows projects down and weakens their results.

These costs compound when data cleanup is done repeatedly in silos. Just because one person noticed and cleaned up an issue in one project doesn't mean the issue is resolved for their colleagues and their respective projects. Even if a data engineering team can clean data up en masse, it may not be able to do so immediately, and it may not fully understand what the fix means or why it is needed.
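To make the contrast with siloed cleanup concrete, here is a minimal Python sketch, assuming records arrive as a list of dictionaries. A known issue is written once as a named rule in a shared registry, and every project runs the same rules instead of re-discovering the problem. The names `QualityRule`, `SHARED_RULES`, and `run_rules`, and the two example rules, are hypothetical illustrations, not part of any particular product.

```python
from dataclasses import dataclass
from typing import Any, Callable

Record = dict[str, Any]


@dataclass(frozen=True)
class QualityRule:
    """A named check that returns True when a single record is valid."""
    name: str
    description: str
    is_valid: Callable[[Record], bool]


# Hypothetical shared registry: once someone codifies a known issue here,
# every project that imports it runs the same check.
SHARED_RULES: list[QualityRule] = [
    QualityRule(
        name="email_has_at_sign",
        description="Email addresses must contain '@'",
        is_valid=lambda r: "@" in str(r.get("email", "")),
    ),
    QualityRule(
        name="age_in_range",
        description="Age must be an integer between 0 and 120",
        is_valid=lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120,
    ),
]


def run_rules(records: list[Record], rules: list[QualityRule]) -> dict[str, list[int]]:
    """Return, for each rule, the indices of the records that fail it."""
    failures: dict[str, list[int]] = {rule.name: [] for rule in rules}
    for i, record in enumerate(records):
        for rule in rules:
            if not rule.is_valid(record):
                failures[rule.name].append(i)
    return failures


if __name__ == "__main__":
    customers = [
        {"email": "ana@example.com", "age": 34},
        {"email": "bob.example.com", "age": 41},
        {"email": "cy@example.com", "age": 250},
    ]
    print(run_rules(customers, SHARED_RULES))
    # → {'email_has_at_sign': [1], 'age_in_range': [2]}
```

Because the rules live in one place, an issue that one person finds and codifies becomes a check that runs in every project, rather than a one-off fix buried in a single notebook.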

Data quality's impact on machine learning

Clean data is particularly important for ML projects. Whether we are doing classification or regression, supervised or unsupervised learning, deep neural networks or just good old k-means, once an ML model goes into production, its builders must continually evaluate it against new data.

A key part of the ML lifecycle is checking for data drift to ensure the model still works and provides value to the business. Data is an ever-changing landscape, after all:

- Source systems may be merged after an acquisition

- New governance may come into play

- The commercial landscape can change

Previous assumptions about the data may no longer hold. Sure, model promotion, testing, and retraining are covered well by tools like Databricks/MLflow, Amazon SageMaker, or Azure ML Studio. However, these tools are not as well equipped for the follow-up investigation: working out which part of the data has changed and why, and then going and fixing it. This is tedious and time-consuming.
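As an illustration of that investigation step, the sketch below scores each categorical column for drift with the Population Stability Index (PSI), PSI = Σ (aᵢ − eᵢ) ln(aᵢ / eᵢ), where eᵢ and aᵢ are the baseline and current proportions of category i. PSI is only one common drift metric among several, chosen here for illustration; nothing in the text above says the tools mentioned use it. The sketch assumes each dataset is a plain dictionary mapping column names to non-empty lists of values, and the names `psi` and `drifted_columns`, the `eps` smoothing, and the 0.2 threshold (a widely used rule of thumb) are all assumptions.

```python
import math
from collections import Counter


def psi(expected: list[str], actual: list[str], eps: float = 1e-4) -> float:
    """Population Stability Index between two non-empty categorical samples.

    Proportions of zero are floored at eps so the log term stays finite.
    """
    exp_counts, act_counts = Counter(expected), Counter(actual)
    total = 0.0
    for category in set(expected) | set(actual):
        e = max(exp_counts[category] / len(expected), eps)
        a = max(act_counts[category] / len(actual), eps)
        total += (a - e) * math.log(a / e)
    return total


def drifted_columns(
    baseline: dict[str, list[str]],
    current: dict[str, list[str]],
    threshold: float = 0.2,  # assumed rule-of-thumb cutoff, tune per use case
) -> dict[str, float]:
    """Return the columns present in both datasets whose PSI exceeds threshold."""
    scores = {col: psi(baseline[col], current[col]) for col in baseline if col in current}
    return {col: round(score, 3) for col, score in scores.items() if score > threshold}


if __name__ == "__main__":
    baseline = {
        "country": ["UK"] * 50 + ["US"] * 50,
        "segment": ["retail"] * 80 + ["corporate"] * 20,
    }
    # Hypothetical post-acquisition data: the customer mix has shifted.
    current = {
        "country": ["UK"] * 50 + ["US"] * 50,
        "segment": ["retail"] * 30 + ["corporate"] * 70,
    }
    print(drifted_columns(baseline, current))
    # → {'segment': 1.117}
```

Scoring column by column, rather than only tracking overall model accuracy, points the investigation at *which* part of the data changed; explaining *why* it changed still needs people who understand the source systems.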

So, how do you prevent these problems for ML projects? By being data-driven. Being data-driven isn’t just about technical people building pipelines and models. The entire company needs to work towards it. What if entering data required a business workflow with somebody to approve it? What if a front-office, non-technical stakeholder could contribute knowledge at the start of the data journey? And what if we could proactively monitor and clean our data?

How to overcome data quality barriers to ML

This is where Ataccama provides real value. Instead of wasting a data scientist’s time in a never-ending game of whack-a-mole, we make data quality a team sport. We don’t wait for an issue to crop up in production and scramble to investigate and fix it.

We constantly test all our data, wherever it lives, against an ever-expanding pool of known issues. Everybody contributes, and all data has clear, well-defined data owners. So when a data scientist is asked what they do, they can finally say:

“Build machine learning models and analyze data.”